Artificial Archeology

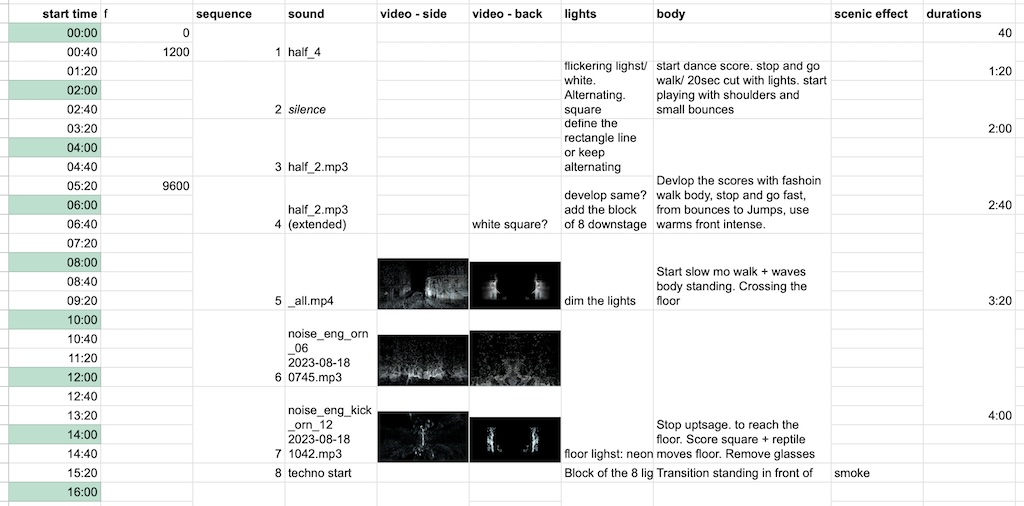

See the trailer at Tanzforum Berlin.

Intro

Artificial Archeology, a performance by Lucas Kuzma and Christine Bonansea, uses the artistry of dance and the innovation of technology to take us on a journey through the realms of space and time. Reminiscent of Bachelard’s “Poetics of Space,” it delves into the intimate and profound connections between our lived experiences and the vast expanses of the universe, evoking a mesmerizing exploration that transcends the conventional boundaries of perception.

Kuzma uses LiDAR scans, generative AI, and traditional generative techniques to reinvent and remap cityscapes, blending our past, our present, and a post-human future. The project invites us to reconsider the very architecture of time. Here we document some of the technologies and techniques used in the project.

Scanning

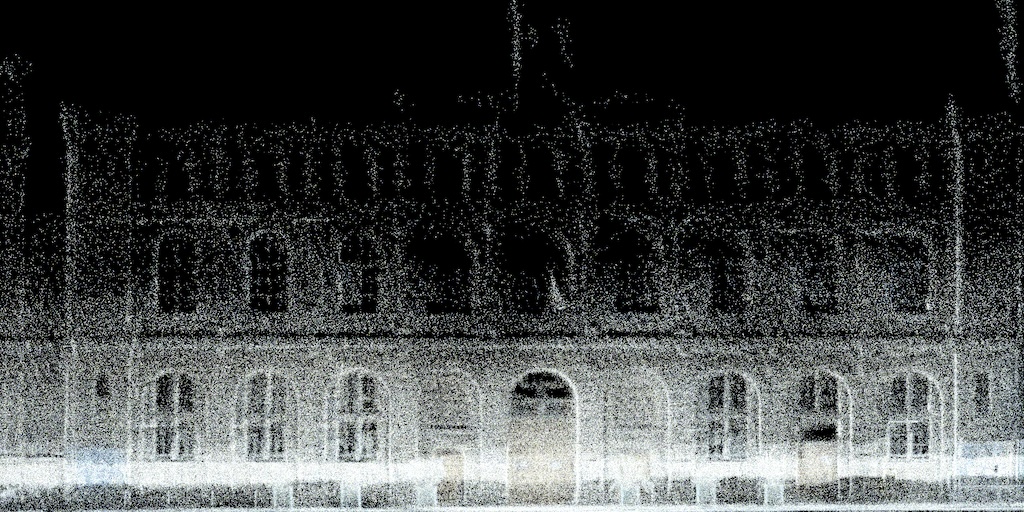

The piece begins with a laser scan of Parisian architecture, with a long, frontal projection on the side walls, and a square longitudinal view of the same. Since we are working with a point cloud, we are able to generate this orthogonal projection, which appears as an x-ray uncovering the city’s innards, but also unwrapping its layered past.

Frontal scan

We slowly pan across the scan, slicing through the building, exploring and exposing its hidden innards.
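The slicing pan and orthogonal projection can be sketched roughly as follows. This is a minimal illustration in plain Python, not the production pipeline: the function name `slice_and_project`, the toy cloud, and the axis convention are all assumptions made for the example.

```python
def slice_and_project(points, axis, lo, hi):
    """Keep points whose coordinate along `axis` lies in [lo, hi),
    then drop that axis to get a flat, orthogonal 2D view."""
    kept = [p for p in points if lo <= p[axis] < hi]
    return [tuple(c for i, c in enumerate(p) if i != axis) for p in kept]


# Hypothetical toy cloud of (x, y, z) points; a real scan has millions.
cloud = [(0.0, 1.0, 2.0), (0.5, 1.5, 9.0), (3.0, 0.2, 2.5)]

# Frontal view: keep a thin slab along z and flatten it onto the x/y plane.
frontal = slice_and_project(cloud, axis=2, lo=2.0, hi=3.0)
```

Moving `lo` and `hi` forward a little on each frame would give the effect of panning through the building one slab at a time.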

Longitudinal scan

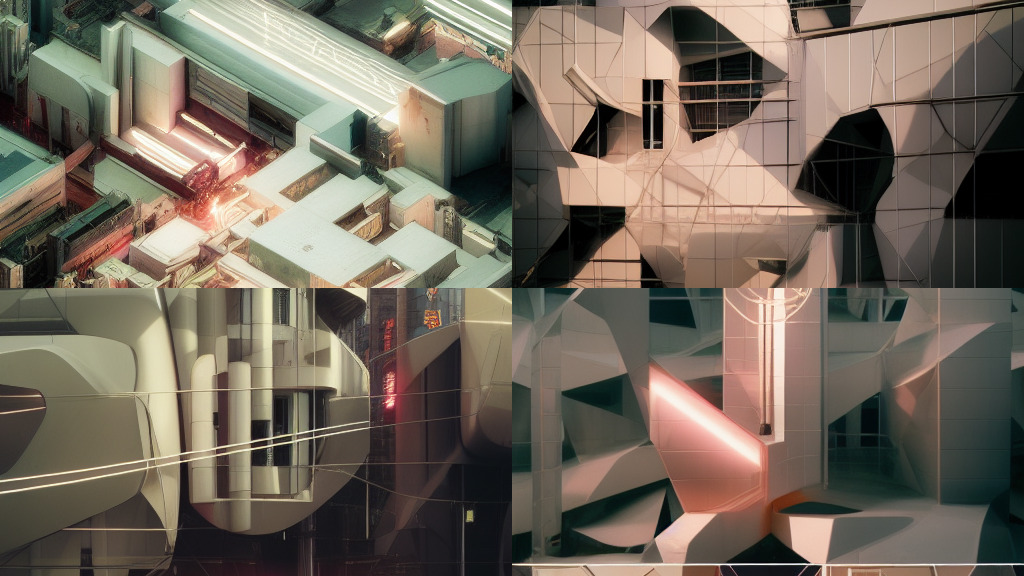

Reconstruction

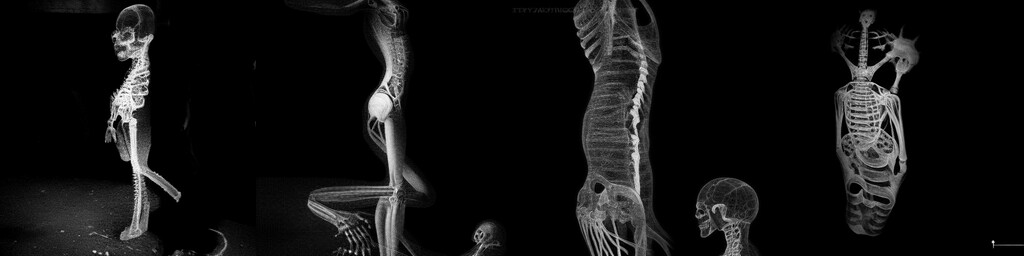

We then task Stable Diffusion with interpreting the findings, prompting it to discover catacombs, closeted skeletons, archeological artifacts.

The interpretations are swapped in and blended with the original scans, with a steady tempo which periodically doubles, creating a sense of acceleration, mirroring the rapid technological changes we are witnessing now.

The Stable Diffusion prompts, strength, and seeds are also rhythmically altered, matching the audio track.
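One way to plan such a schedule is sketched below. It only computes which frames get a swap and which settings go with each; it does not call Stable Diffusion. The function names, interval values, strengths, and base seed are assumptions for illustration, and the prompts are taken from the themes mentioned above.

```python
def swap_frames(total_frames, start_interval, double_every):
    """Frames at which a swap happens. The interval between swaps halves
    (the tempo doubles) once every `double_every` frames."""
    frames = []
    frame = 0
    while frame < total_frames:
        frames.append(frame)
        halvings = frame // double_every
        interval = max(1, start_interval >> halvings)
        frame += interval
    return frames


def diffusion_schedule(frames, prompts, strengths, base_seed):
    """Pair each swap frame with a prompt, strength, and seed, cycling the lists."""
    return [
        {
            "frame": f,
            "prompt": prompts[i % len(prompts)],
            "strength": strengths[i % len(strengths)],
            "seed": base_seed + i,
        }
        for i, f in enumerate(frames)
    ]


frames = swap_frames(total_frames=128, start_interval=32, double_every=64)
schedule = diffusion_schedule(
    frames,
    prompts=["catacombs", "closeted skeletons", "archeological artifacts"],
    strengths=[0.4, 0.6],  # hypothetical values
    base_seed=1000,        # hypothetical value
)
```

Each entry of `schedule` can then be handed to whatever image-to-image tool is in use, so the visual changes stay locked to the same frame numbers as the audio.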

Deconstruction

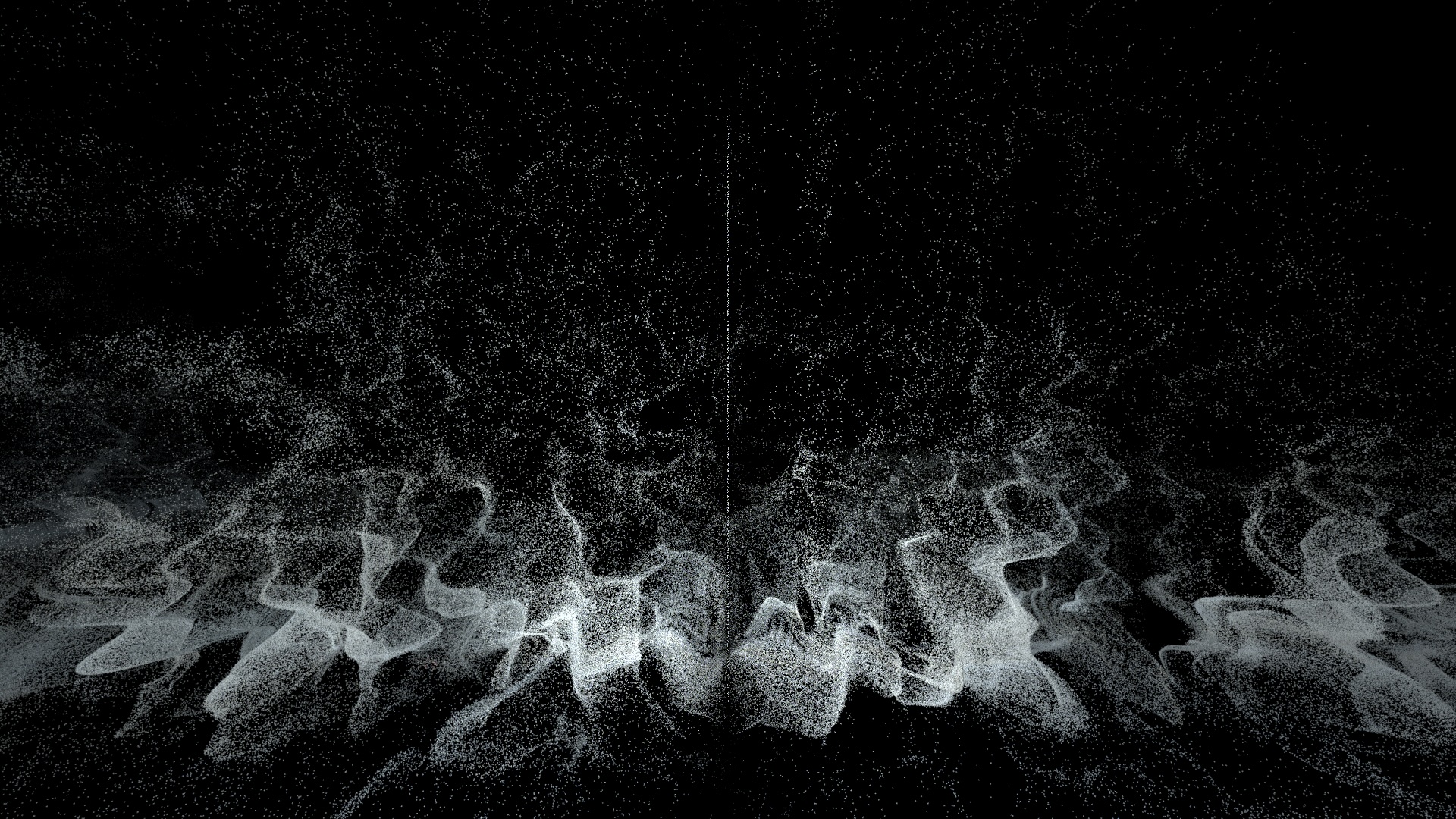

As we move forward through time and space, wave-like disturbances are generated, just as the ineluctable force of gravity bends both time and space.

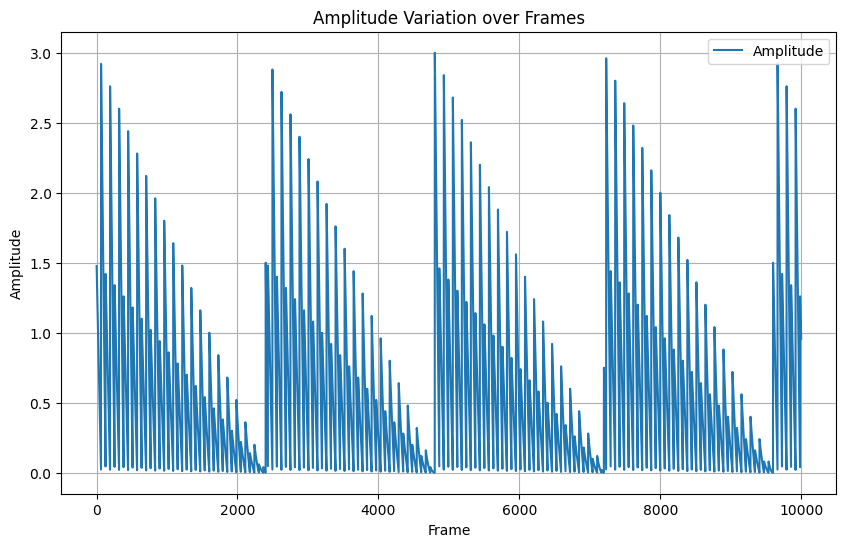

The waves are generated in Houdini, with custom Python scripts used to spike and decay the disturbance amplitude.

```python
import math

node = hou.pwd()
geo = node.geometry()

"""
Periodically spike a node parameter to `max` level, and decay over course of period.
"""

node = hou.node("/obj/geo1/perlin")
parameter = node.parm("amplitude")
frame = hou.frame()
period = 64
max = 3
last_spike_frame = math.floor(frame / period) * period

# how about varying max over time?
max = max * (1 - (frame % 2400) / 2400)

# alternate big and small spikes
if last_spike_frame % (2 * period) == 0:
    max *= 0.5

if frame % period == 0:
    # spike noise amplitude
    parameter.set(max)
else:
    # decay noise amplitude
    distance = frame - last_spike_frame
    if distance <= period:
        parameter.set(max * (1 - distance / period))
```

All wave modulations and temporal phenomena in general are mapped to a grid. This allows us to synchronize all audio perfectly, down to the individual frame.
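Because every spike lands on a multiple of the period, the envelope can also be written as a pure function of the frame number, which makes it easy to check against the audio grid outside Houdini. The sketch below mirrors the arithmetic of the script above; the names `amplitude_at` and `spike_frames` and the default arguments are ours, chosen for illustration.

```python
import math


def amplitude_at(frame, period=64, peak=3.0, fade_frames=2400):
    """Amplitude the Houdini script would set at `frame`, with no Houdini needed."""
    last_spike = math.floor(frame / period) * period
    # Slow fade of the peak level, restarting every `fade_frames` frames.
    level = peak * (1 - (frame % fade_frames) / fade_frames)
    # Every other spike is half height.
    if last_spike % (2 * period) == 0:
        level *= 0.5
    # Linear decay since the last spike (no decay on the spike frame itself).
    return level * (1 - (frame - last_spike) / period)


def spike_frames(total_frames, period=64):
    """Grid positions, in frames, where a spike occurs."""
    return list(range(0, total_frames, period))
```

Audio events placed on the frames returned by `spike_frames` will coincide with the visual spikes by construction.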

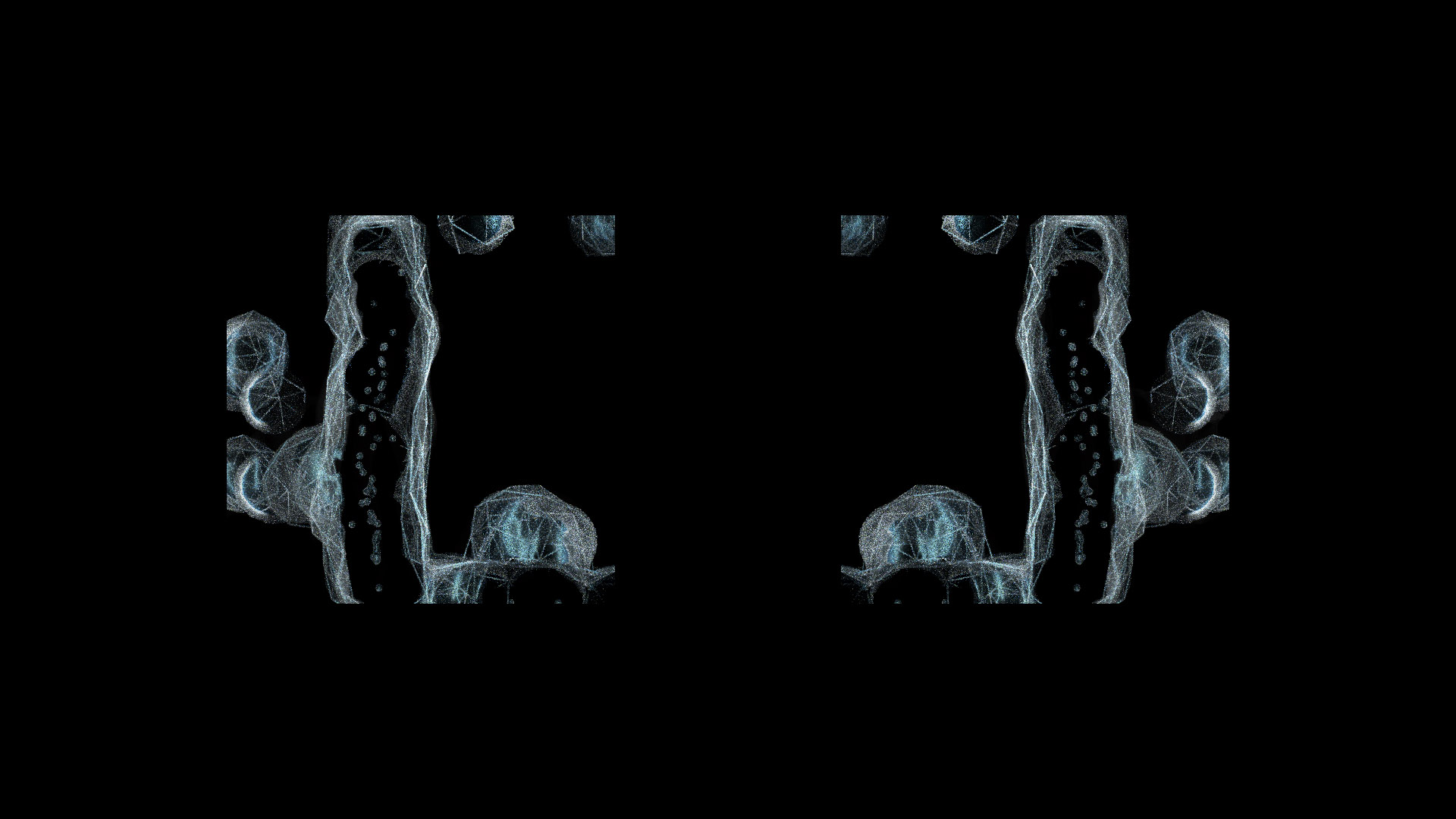

Tunneling

In a nod to the hidden, parallel-world trope often used by Murakami, we enter a cavernous world, moving beyond our present time into potential futures.

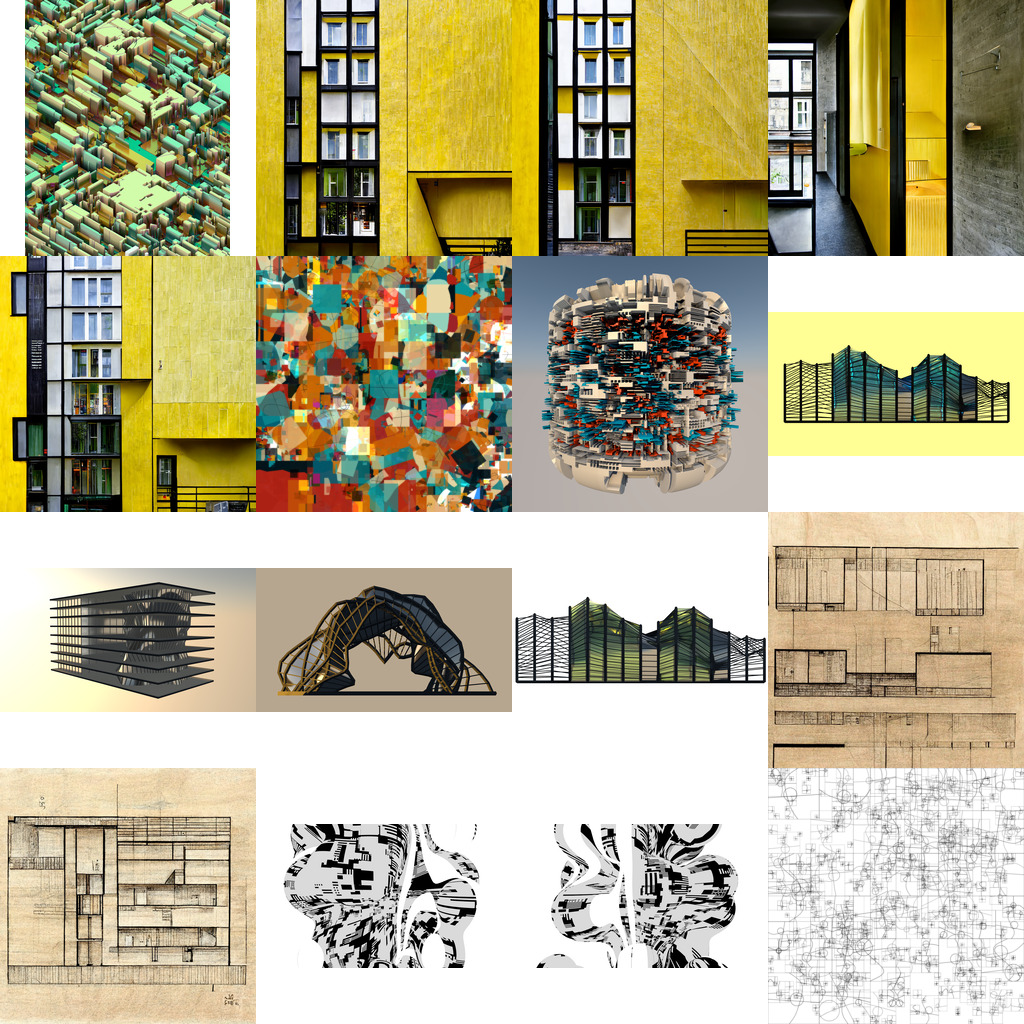

Bricolage

Here we use custom Python scripts to weave together a series of collages, wherein we lean on AI to predict some possible scenarios. Will our future be dystopian or utopian?

Dystopia

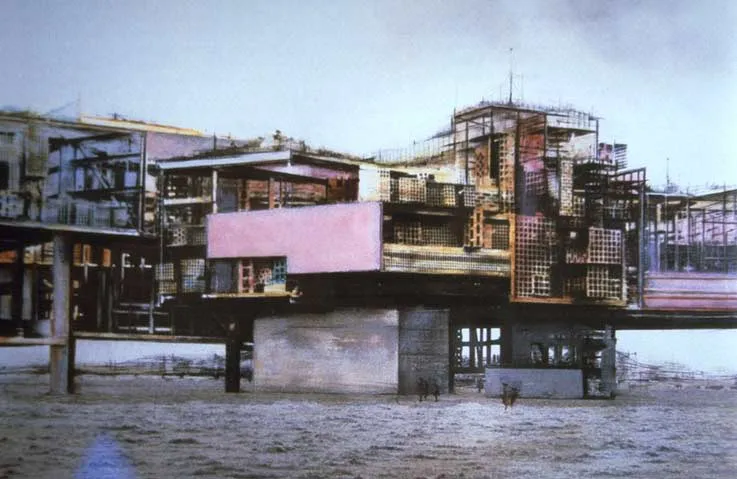

For the dystopian sequence we began with a set of architectural images, generated with various diffusion algorithms, traditional modeling in Cinema 4D, and our own generative scripts written in both Python and P5.js.

Of note is this somewhat fractal approach, using Processing to draw self-similar geometry at various scales. The outputs are not that interesting in themselves, but they lend themselves well to greebling models or serving as sources for later diffusion.
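The idea of self-similar geometry at several scales can be illustrated with a short recursive routine. This is not the sketch used in the piece, only a minimal Python stand-in: `nested_squares` is a hypothetical name, and it simply returns square coordinates rather than drawing them.

```python
def nested_squares(x, y, size, depth):
    """Return (x, y, size) squares: one at this scale, plus a half-size
    copy of the whole pattern in each of its four quadrants."""
    squares = [(x, y, size)]
    if depth > 0:
        half = size / 2
        for dx in (0, half):
            for dy in (0, half):
                squares += nested_squares(x + dx, y + dy, half, depth - 1)
    return squares


# Two levels of recursion below a unit square.
pattern = nested_squares(0, 0, 1.0, depth=2)
```

Skipping some quadrants at random, or varying the shape drawn at each level, would give less regular output that is closer to typical greeble textures.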

Try the P5.js sketch.

The resulting collages are then layered to create parallax effects, with an added ominous stutter, created with a simple After Effects script.

```javascript
// horizontal motion based on current frame, with stutter for first 4 of every 32 frames
var speed = 1.2;
var currentFrame = Math.floor(timeToFrames(time));

if (currentFrame % 32 < 4) [Math.floor(random() * 20) * 682 - 256, value[1]];
else [currentFrame * speed, value[1]];
```

Utopia

The utopian world was inspired by ideas from Constant’s New Babylon, where cities gave way to autonomous networked clusters, optimized for the creation of art and for play rather than commerce.

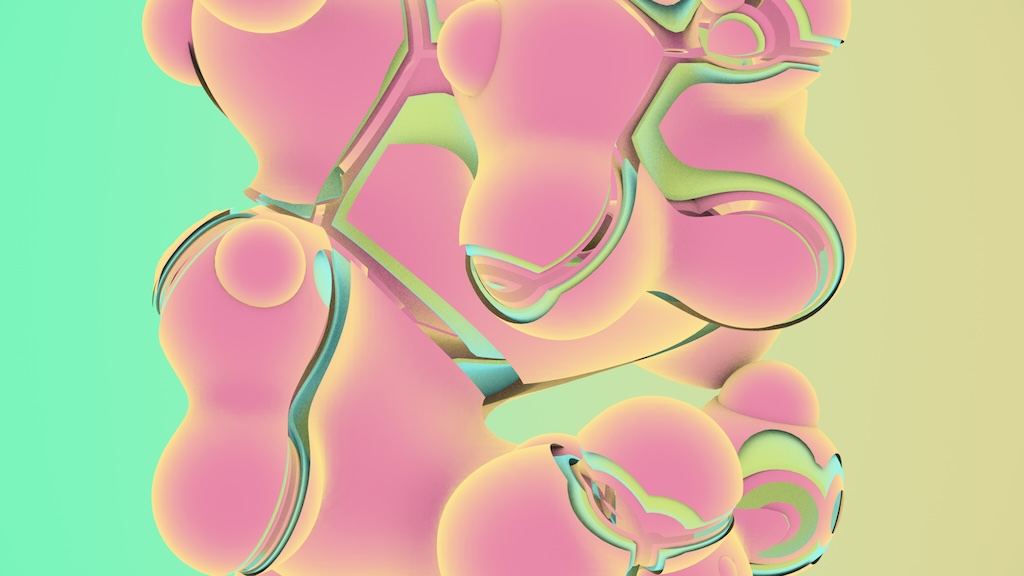

Mesh geometry was generated using traditional generative techniques and then fed into Stable Diffusion.

The results were combined with hexagonal masks and collaged as above. The hexagons further play on notions of peaceful communities and natural habitats, recalling the work of bees.
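A hexagonal mask boils down to a point-in-hexagon test applied to every pixel. The sketch below is one simple way to do this in plain Python, assuming a regular hexagon with vertices on its left and right; the function names and grid size are illustrative, not taken from the project scripts.

```python
import math


def inside_hexagon(px, py, cx, cy, radius):
    """True if (px, py) lies inside a regular hexagon centred at (cx, cy)
    whose vertices sit at 0, 60, 120, ... degrees at distance `radius`."""
    dx, dy = abs(px - cx), abs(py - cy)
    root3 = math.sqrt(3)
    # Flat top/bottom edges, then the four slanted edges.
    return dy <= root3 / 2 * radius and root3 * dx + dy <= root3 * radius


def hexagon_mask(width, height, cx, cy, radius):
    """Rows of 0/1 values; 1 marks pixels whose centre is inside the hexagon."""
    return [
        [1 if inside_hexagon(x + 0.5, y + 0.5, cx, cy, radius) else 0
         for x in range(width)]
        for y in range(height)
    ]


mask = hexagon_mask(8, 8, cx=4.0, cy=4.0, radius=3.0)
```

Multiplying an image's alpha channel by such a mask leaves only the hexagonal region visible for collaging.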

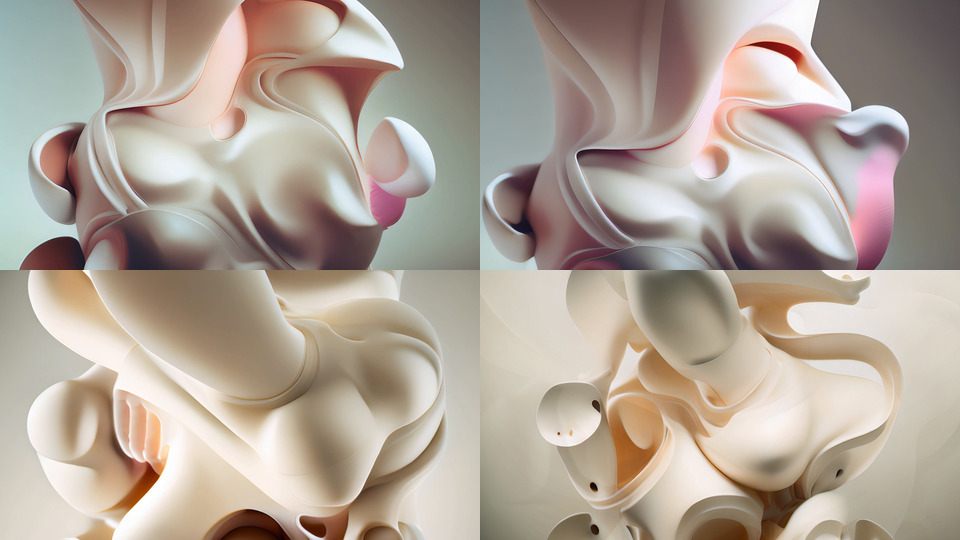

Post-Human

For the final segment, we move beyond humans and their architectural needs, envisioning a world of pure form and eventual formlessness. Once again, we rely on traditional 3D techniques to create sliced metaballs, which then get fed into Runway pipelines, creating beautiful animated abstractions.
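For readers unfamiliar with metaballs, a slice through them can be approximated by evaluating a summed falloff field on a plane and thresholding it. The sketch below assumes the common inverse-square falloff and an iso level of 1.0; both are illustrative choices, as are the function names, and the piece itself used a 3D package rather than this code.

```python
def field(x, y, z, balls):
    """Sum of r^2 / d^2 contributions; `balls` is a list of (cx, cy, cz, r)."""
    total = 0.0
    for cx, cy, cz, r in balls:
        d2 = (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2
        total += r * r / max(d2, 1e-9)  # guard against division by zero
    return total


def slice_mask(balls, z, size, extent, iso=1.0):
    """Rasterise the plane at height `z` into a size x size grid of 0/1,
    covering [-extent, extent] on both axes."""
    step = 2 * extent / (size - 1)
    return [
        [1 if field(-extent + i * step, -extent + j * step, z, balls) >= iso else 0
         for i in range(size)]
        for j in range(size)
    ]


# A single hypothetical ball of radius 1 at the origin.
balls = [(0.0, 0.0, 0.0, 1.0)]
middle = slice_mask(balls, z=0.0, size=5, extent=2.0)
above = slice_mask(balls, z=2.0, size=5, extent=2.0)
```

With several balls the fields add up, so nearby blobs merge smoothly in each slice, which is what gives metaballs their organic look.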

In the finale, our audience is invited to share the stage with the performer, watching as our organics accelerate into transcendence. And into the stars.

Neurons

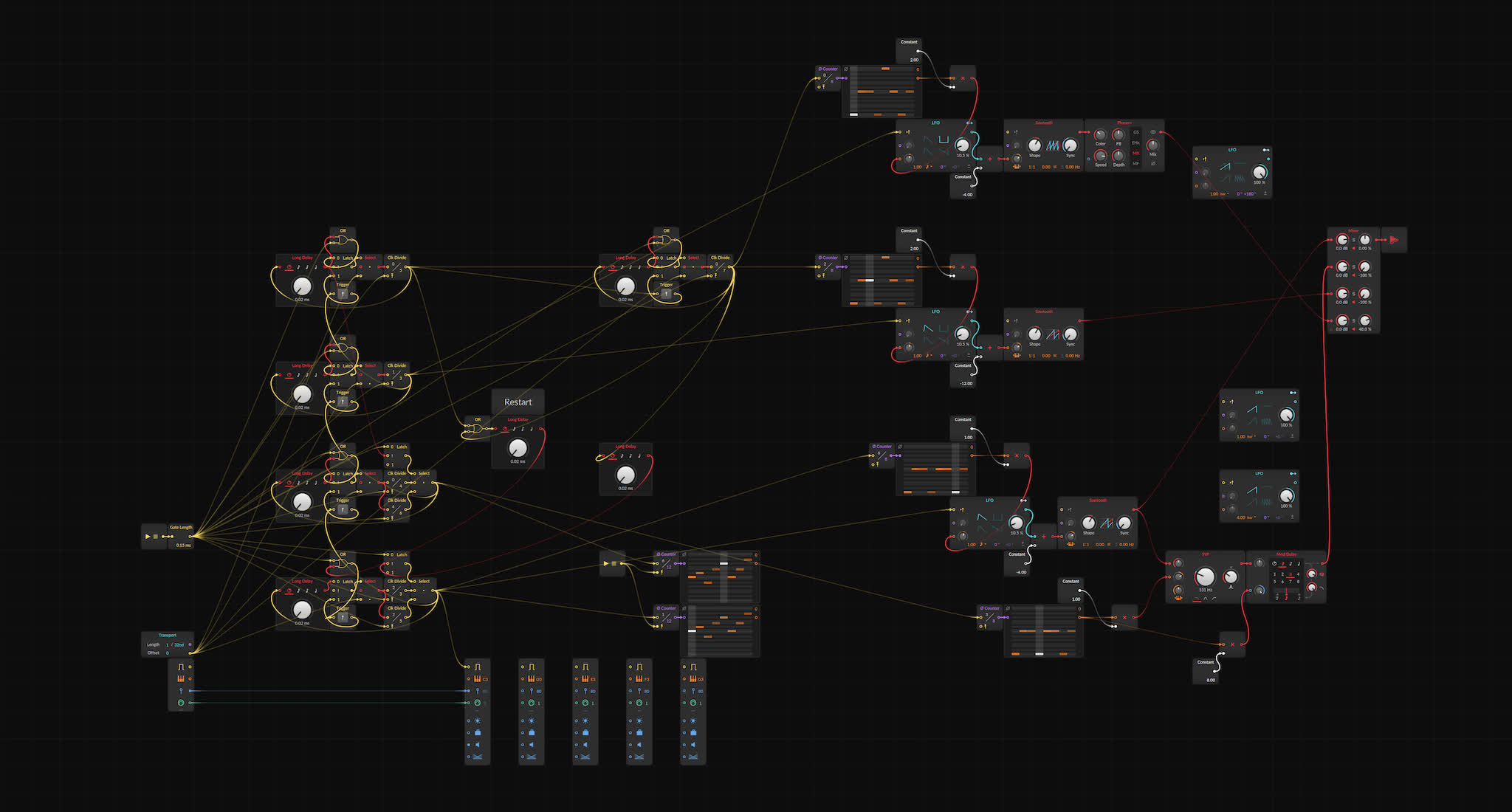

Audio for the piece was largely scored in Bitwig. Nodes following a basic threshold neuron model were responsible for the audio sequencing. The result is very much like a Bitwig Grid simulation of Soma’s Ornament 8.
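The general idea of a threshold neuron used as a sequencer can be sketched in a few lines. This is a generic toy model in Python, not the Bitwig patch: each node accumulates input, fires when it reaches a threshold, resets, and passes a pulse to the nodes it is wired to. The function name, weights, and drive values are all hypothetical.

```python
def step(potentials, weights, threshold, drive):
    """Advance the network one tick. Returns (new_potentials, fired_indices).
    weights[i][j] is the pulse node i sends to node j when it fires."""
    fired = [i for i, p in enumerate(potentials) if p >= threshold]
    new_potentials = []
    for j, p in enumerate(potentials):
        if j in fired:
            p = 0.0  # reset after firing
        p += drive[j] + sum(weights[i][j] for i in fired)
        new_potentials.append(p)
    return new_potentials, fired


# Two nodes: node 0 charges steadily, and its firing triggers node 1.
potentials = [0.0, 0.0]
weights = [[0.0, 1.0], [0.0, 0.0]]
drive = [0.5, 0.0]

pattern = []
for _ in range(5):
    potentials, fired = step(potentials, weights, threshold=1.0, drive=drive)
    pattern.append(fired)
```

Each fired index can be mapped to a note or trigger, so the wiring between nodes, rather than a fixed step list, determines the rhythm.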

Recordings

A DVD of the entire performance as documented by Tanzforum Berlin is available for viewing exclusively in the reference collections of the following archives (at media desks in these institutions):