CAD Extrusion

In a recent post, we used Stable Diffusion to explore industrial design based on flat vector images. In this post, we will delve into the third dimension, using ControlNet depth and normal models.

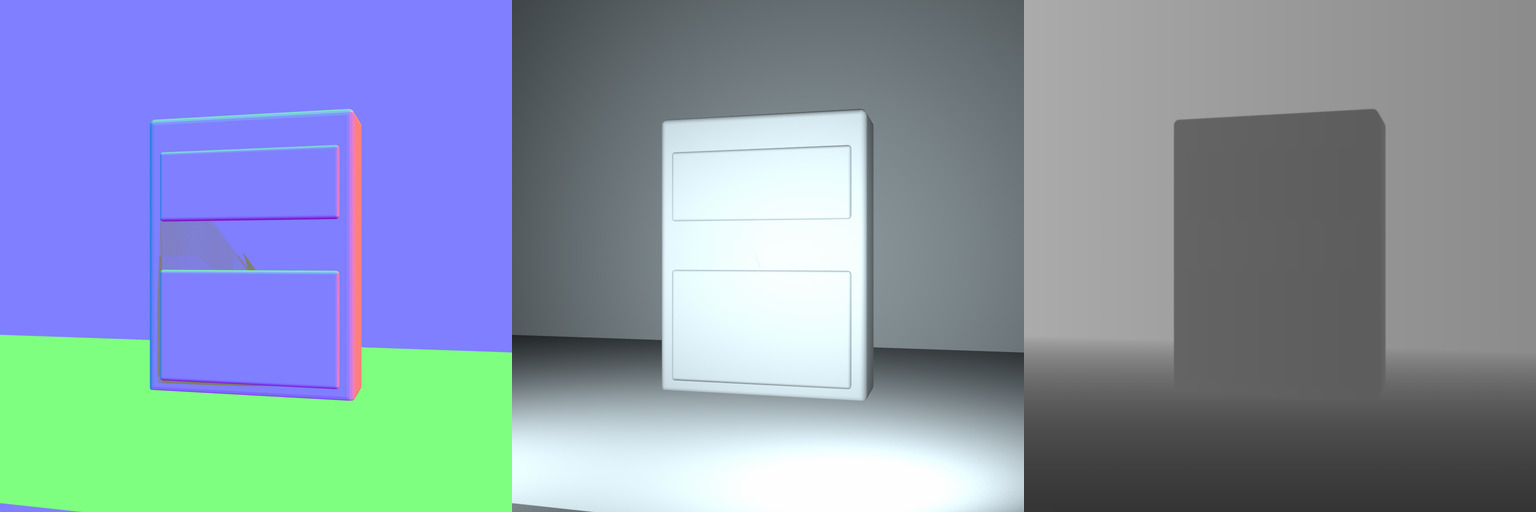

Let’s start by making a simple box with a couple of panels, lit with a couple of lights. The image below was made with Houdini and rendered with Octane, which is serious overkill given what these applications are capable of. In any case, the box was rendered in multiple passes to produce the actual render, a surface normal pass, and a depth image. If you are not familiar with rendering, just know that the normal pass uses colors to show which direction each part of the surface is facing, while the depth image uses brightness to show distance from the camera.
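To make the normal pass a little more concrete, here is a minimal sketch of a common way a surface normal becomes a color: each component of the unit vector is remapped from the range -1..1 to 0..255. The helper name `normal_to_rgb` is ours, and axis conventions (which way is up, whether an axis is flipped) vary between renderers, so treat this as an illustration rather than a description of what Octane does internally.

```python
import math

def normal_to_rgb(nx, ny, nz):
    """Map a surface normal to an 8-bit RGB color (common convention, assumed here)."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    # Normalize, then remap each component from [-1, 1] to [0, 255].
    return tuple(
        int((component / length * 0.5 + 0.5) * 255 + 0.5)
        for component in (nx, ny, nz)
    )

# A face pointing along +z, toward the camera in this assumed convention.
print(normal_to_rgb(0.0, 0.0, 1.0))
# A face pointing along +x.
print(normal_to_rgb(1.0, 0.0, 0.0))
```

Under this convention, a face pointing along +z comes out as the familiar pale blue of normal maps, while faces angled sideways shift toward red or green.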

As before, we will use the Stable Diffusion web UI with the ControlNet extension to generate our images.

To better control the output, we will go with img2img rather than purely prompt-generated imagery. We use default settings for just about everything but bump the denoising strength up to 0.8, which gives the model more leeway to depart from the source image. Given our fairly boring input, lower settings result in boring images.
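One rough way to think about denoising strength is as the fraction of the sampling schedule that gets applied to the source image. The sketch below is a simplification modeled on how the Hugging Face diffusers img2img pipeline derives its step count; the web UI may differ in detail. The helper `steps_actually_run` is hypothetical, and the 20 total steps are an assumed value.

```python
def steps_actually_run(total_steps, denoising_strength):
    """Approximate number of sampling steps applied to the source image.

    Assumption: strength simply scales the step count, as in the
    diffusers img2img pipeline. Other implementations may differ.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising strength must be between 0 and 1")
    return min(int(total_steps * denoising_strength), total_steps)

for strength in (0.5, 0.8, 1.0):
    print(strength, steps_actually_run(20, strength))
```

At 0.8 most of the schedule is spent reworking the image, which fits with the model taking more liberties; at 1.0 the whole schedule runs.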

We use ControlNet in normal, depth, and Canny modes, supplying the appropriate source image for each.
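We did all of this by hand in the web UI, but the same setup could presumably be scripted. The sketch below only builds a request body and sends nothing. The field names (`init_images`, `denoising_strength`, `alwayson_scripts`, and the ControlNet unit keys) are as we recall them from the web UI's HTTP API and the ControlNet extension, so verify them against your installed version. The prompt, the model name, and the byte strings are placeholders, and `build_img2img_payload` is a hypothetical helper.

```python
import base64
import json

def encode_image(raw_bytes):
    """Base64-encode image bytes for embedding in a JSON body."""
    return base64.b64encode(raw_bytes).decode("ascii")

def build_img2img_payload(render_bytes, control_bytes, prompt,
                          module, model, strength=0.8, seed=-1):
    """Build an img2img request body with one ControlNet unit.

    Key names are assumptions based on the web UI API and may differ
    between versions (older ControlNet builds used "input_image").
    """
    unit = {
        "image": encode_image(control_bytes),
        "module": module,  # preprocessor; "none" for a pre-rendered pass
        "model": model,    # placeholder, use the name your install lists
    }
    return {
        "prompt": prompt,
        "init_images": [encode_image(render_bytes)],
        "denoising_strength": strength,
        "seed": seed,
        "alwayson_scripts": {"controlnet": {"args": [unit]}},
    }

payload = build_img2img_payload(
    render_bytes=b"<render PNG bytes>",        # placeholder data
    control_bytes=b"<normal pass PNG bytes>",  # placeholder data
    prompt="placeholder prompt",
    module="none",
    model="<normal model name>",
)
print(json.dumps(payload, indent=2)[:200])
```

Because the normal and depth passes come straight from the renderer, the preprocessor is set to `none` here; for Canny you would more likely let a preprocessor extract edges from the render.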

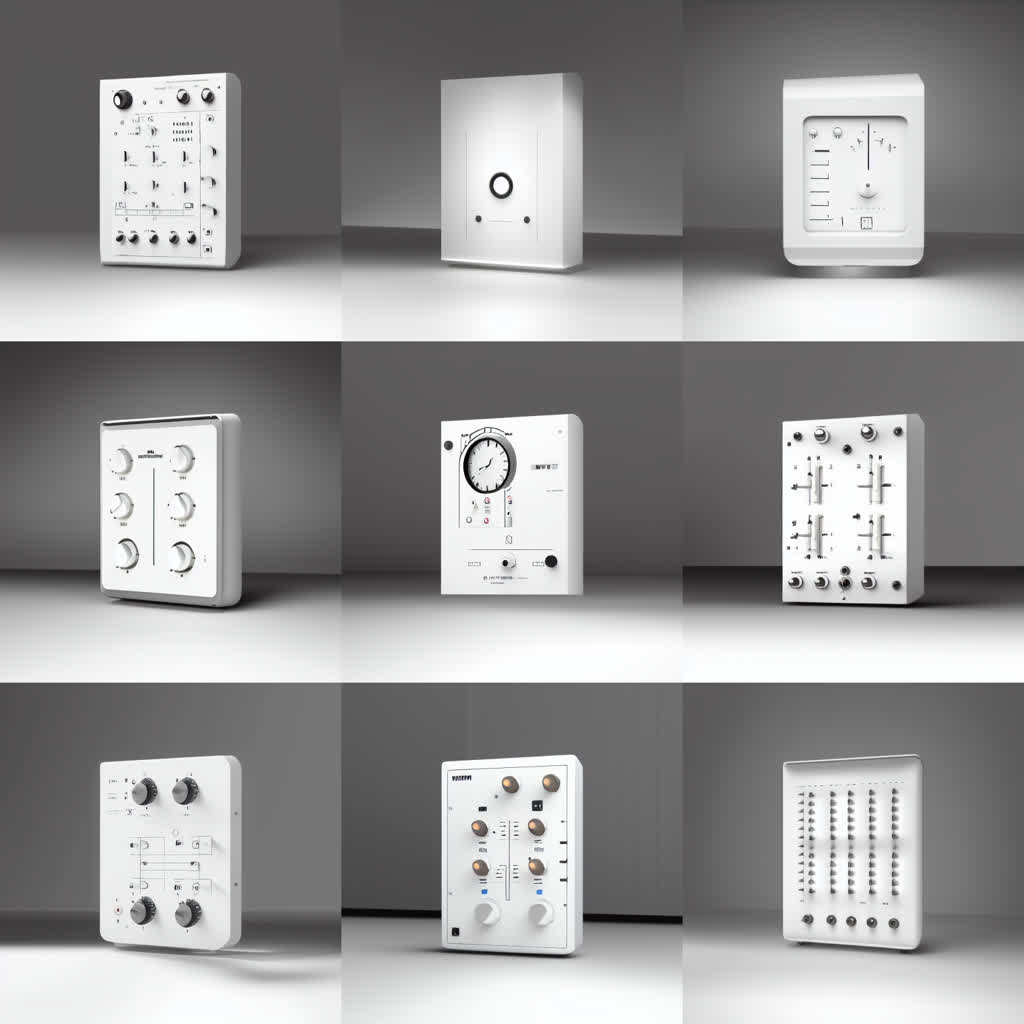

Interestingly, the Canny model preserves the 3D look, complete with shadows and reflections, along with a fairly consistent background. The devices themselves do look a bit flat compared to the depth and normal versions, but it is hard to generalize when the prompt and seed have such a massive impact on the output image.

Cranking the denoising strength up to 1.0 (why is there no 1.1?), we still manage to remain quite faithful to the original render.

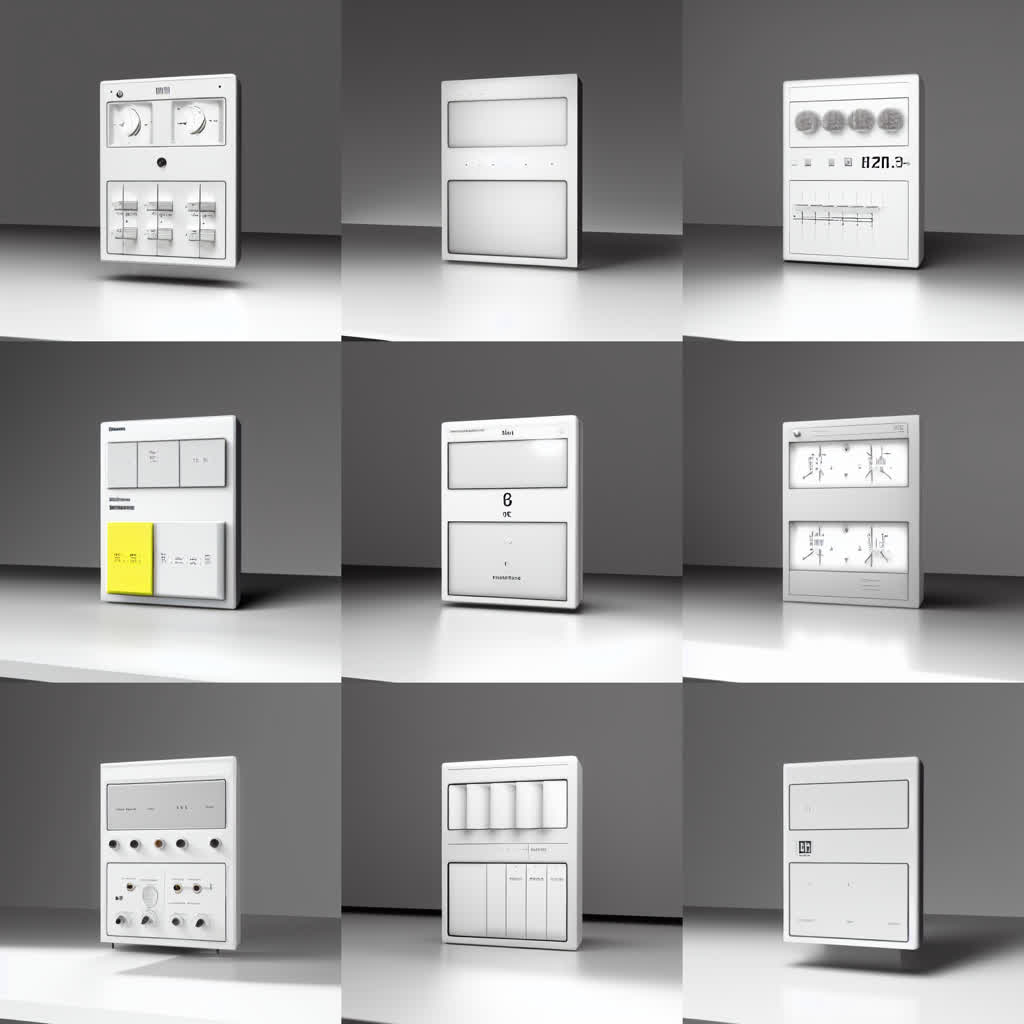

For comparison, here is the output using only the prompt and the normal ControlNet, with no source image.

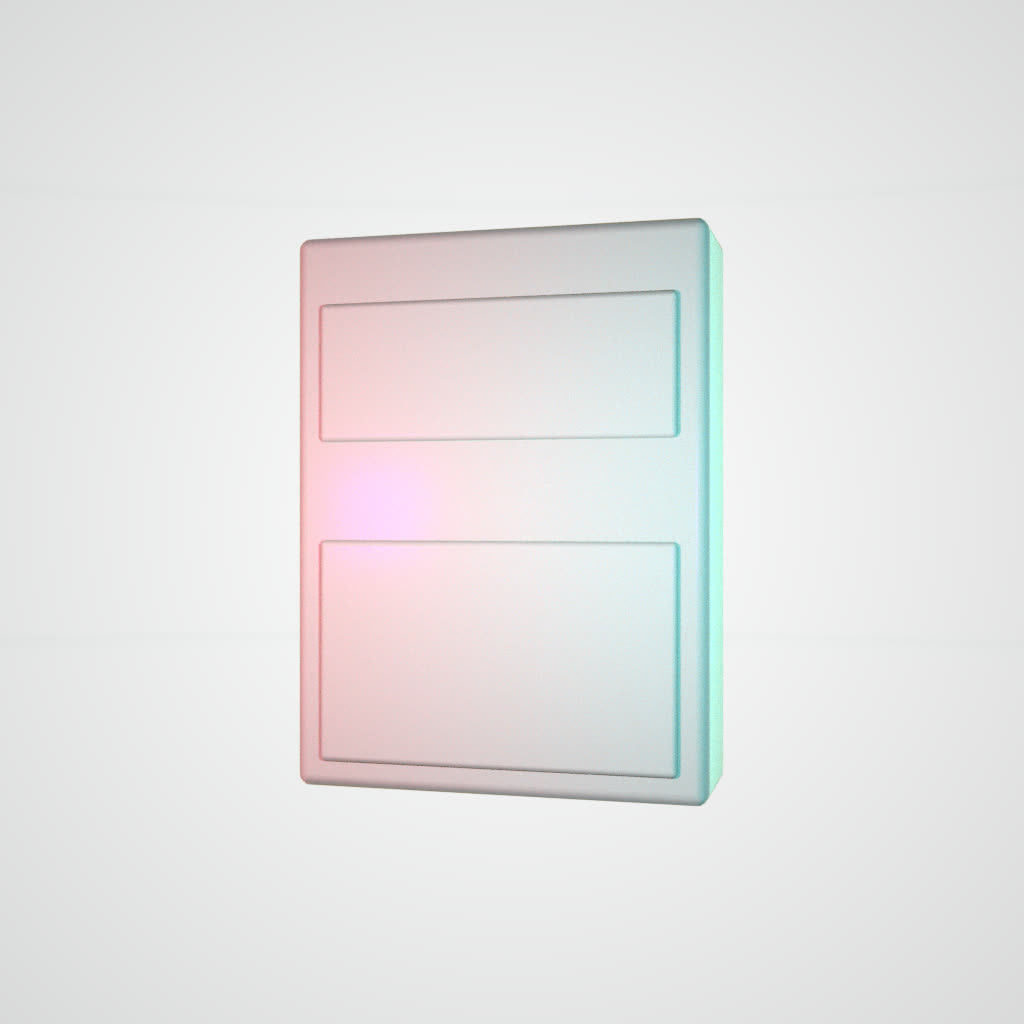

We can try to introduce a bit of color by going back to our original 3D model and adding a surface material with thin-film interference along with slightly colored lighting, which produces some subtle color gradients.

To get any of the original color to show through, however, we have to lower the denoising strength to somewhere around 0.65. Even here, the model is pretty liberal with its color renditions.

Normal • Depth • Canny, each at denoising strength 0.65

An unfortunate consequence of lowering the denoising strength is that we are back to far flatter and simpler images. It seems that with this set of tools, our control over color is still quite limited.

Adding a hint of a knob to the render, along with some animation, we get this fun exploratory video. Note that the seed is held constant, which keeps the animation somewhat on track, but the frames still vary too much for smooth playback. Here, again, we could lower the denoising strength for more consistency, but then the result would not be as interesting.
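As a toy illustration of why a fixed seed helps, the sketch below uses Python's `random` module as a stand-in for the noise the sampler starts from. This is not how the web UI generates its noise; `initial_noise` and the seed values are hypothetical. The point is only that the same seed reproduces the same noise for every frame, so the differences between frames come from the changing input rather than from the random starting point.

```python
import random

def initial_noise(seed, count=4):
    """Stand-in for a sampler's starting noise: a few Gaussian samples."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]

# Same seed for two frames: identical starting noise.
same_seed_matches = initial_noise(1234) == initial_noise(1234)
# Different seeds: the starting noise differs.
different_seed_matches = initial_noise(1234) == initial_noise(1235)

print(same_seed_matches, different_seed_matches)
```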